
Can LLMs Spot Relationships in Arbitration Cases?

Large Language Models (LLMs) such as GPT-4o, Llama 3.1, and Claude 3.5 are powerful tools, and they come up in the context of arbitration at almost every event I've attended. However, much of the focus has been on the regulation of AI usage. Stepping away from that topic, we decided to explore the practical value LLMs bring to the arbitration process, specifically in identifying parties, their roles, and their relationships.

Experiment Overview

We conducted an experiment to determine whether LLMs can effectively extract party names and their roles from arbitration submissions. This capability would make it easier to understand who is involved and how each person relates to the dispute.

Named Entity Extraction

Named entity extraction technology has been around for a while. Previously, smaller language models or regular expressions could extract the names of people. However, LLMs offer a significant advancement. They not only extract names but also go further, helping to clarify the roles and relationships of the individuals involved.
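To make the contrast concrete, here is a minimal sketch of the older, pattern-based style of name extraction. The pattern, the function name `extract_candidate_names`, and the sample sentence are all invented for illustration and are not taken from our experiment or from the case documents.

```python
import re

# Naive pattern (an assumption for illustration): an optional honorific
# followed by two or more capitalized words.
NAME_PATTERN = re.compile(
    r"\b(?:(?:Mr|Ms|Mrs|Dr)\.\s+)?[A-Z][a-z]+(?:\s+[A-Z][a-z]+)+\b"
)

def extract_candidate_names(text: str) -> list[str]:
    """Return capitalized word sequences that look like names, in order, without duplicates."""
    seen: list[str] = []
    for match in NAME_PATTERN.finditer(text):
        candidate = match.group(0)
        if candidate not in seen:
            seen.append(candidate)
    return seen

# Invented sample sentence, not from any real submission.
sample = "Mr. John Smith, a director of Acme Holdings, met Jane Doe in Toronto."
print(extract_candidate_names(sample))
# → ['Mr. John Smith', 'Acme Holdings', 'Jane Doe']
```

The sketch picks up a company as if it were a person and says nothing about who is a director of what. That gap between names and roles is the part we wanted to test with an LLM.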

Practical Approach

Following our practical rather than theoretical approach to AI use cases in arbitration, we experimented with an actual investment arbitration case to understand the individuals involved, their roles, and their relationships. We used two documents, the Notice of Arbitration and the Claimant Memorial, from Windstream Energy LLC v. Government of Canada (I), PCA Case No. 2013-22, which is publicly available on the ITA Law website.

Methodology

To analyze the documents and assess the model's capability, we used the well-known GPT-4o model. The process included:

Prompting the Model: We asked the model to provide all the names in the provided documents.

Identifying Roles: We then prompted the model to identify the roles of those individuals and present them in a table.

Understanding Relationships: Lastly, we asked the model to identify the relationships between the parties.
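The three steps above can be sketched as a short multi-turn exchange. This is only an illustration under stated assumptions: the prompt wording in `PROMPTS` is paraphrased rather than the exact text we used, and `ask` is a hypothetical placeholder for whatever sends the conversation to the model (a chat interface or an API client). The stand-in `echo_model` exists only so the sketch runs without a model.

```python
from typing import Callable

Message = dict[str, str]
AskFn = Callable[[list[Message]], str]

# Paraphrased prompts (assumed wording), one per step of the methodology.
PROMPTS = [
    "List every person and organisation named in the documents above.",
    "For each name, state its role in the dispute and present the result as a table.",
    "Describe the relationships between the parties you identified.",
]

def run_steps(document_text: str, ask: AskFn) -> list[str]:
    """Send the three prompts in order, keeping earlier turns as context."""
    messages: list[Message] = [
        {"role": "user", "content": f"Documents:\n{document_text}"}
    ]
    answers: list[str] = []
    for prompt in PROMPTS:
        messages.append({"role": "user", "content": prompt})
        answer = ask(list(messages))  # pass a copy so the callee cannot mutate history
        messages.append({"role": "assistant", "content": answer})
        answers.append(answer)
    return answers

def echo_model(messages: list[Message]) -> str:
    """Stand-in for a real model call; reports how many messages it was shown."""
    return f"(reply after {len(messages)} messages)"

print(run_steps("Notice of Arbitration text goes here", echo_model))
```

Keeping the earlier turns in `messages` matters because the second and third prompts refer back to the names produced in the first.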

Findings

The initial list provided by the model was not comprehensive. We attribute this to the model's fine-tuning for conversational output and its tendency not to follow exhaustive instructions precisely. It took several additional prompts to extract the full list (see Table 1 below).
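When a full list only emerges over several prompts, the partial answers need to be combined without duplicates. A minimal sketch of that bookkeeping follows, assuming each response has already been reduced to a plain list of names. The function `merge_name_lists` and the sample names are hypothetical and are not drawn from the case.

```python
def merge_name_lists(batches: list[list[str]]) -> list[str]:
    """Combine name lists from successive prompts, dropping repeats.

    Names are compared case-insensitively with whitespace collapsed;
    the first spelling encountered is the one kept.
    """
    merged: list[str] = []
    seen: set[str] = set()
    for batch in batches:
        for name in batch:
            cleaned = " ".join(name.split())
            key = cleaned.casefold()
            if key and key not in seen:
                seen.add(key)
                merged.append(cleaned)
    return merged

# Invented names for illustration only.
first_answer = ["Jane Doe", "Acme Holdings"]
follow_up = ["jane  doe", "John Smith"]
print(merge_name_lists([first_answer, follow_up]))
# → ['Jane Doe', 'Acme Holdings', 'John Smith']
```

This simple matching will not reconcile variants such as "J. Doe" and "Jane Doe"; those still need a human check.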

But we didn't stop there. We took it a step further to see whether the model could identify the key individuals involved in the dispute and explain their relationships in detail. To our surprise, the model identified all the critical individuals and provided relevant explanations (see Table 2).

Conclusion

Although the LLM required multiple prompts to produce a complete list, it demonstrated its potential to extract names, roles, and relationships of individuals in arbitration submissions. This experiment highlights the practical utility of LLMs in improving the understanding of individuals and their relationships in arbitration cases.

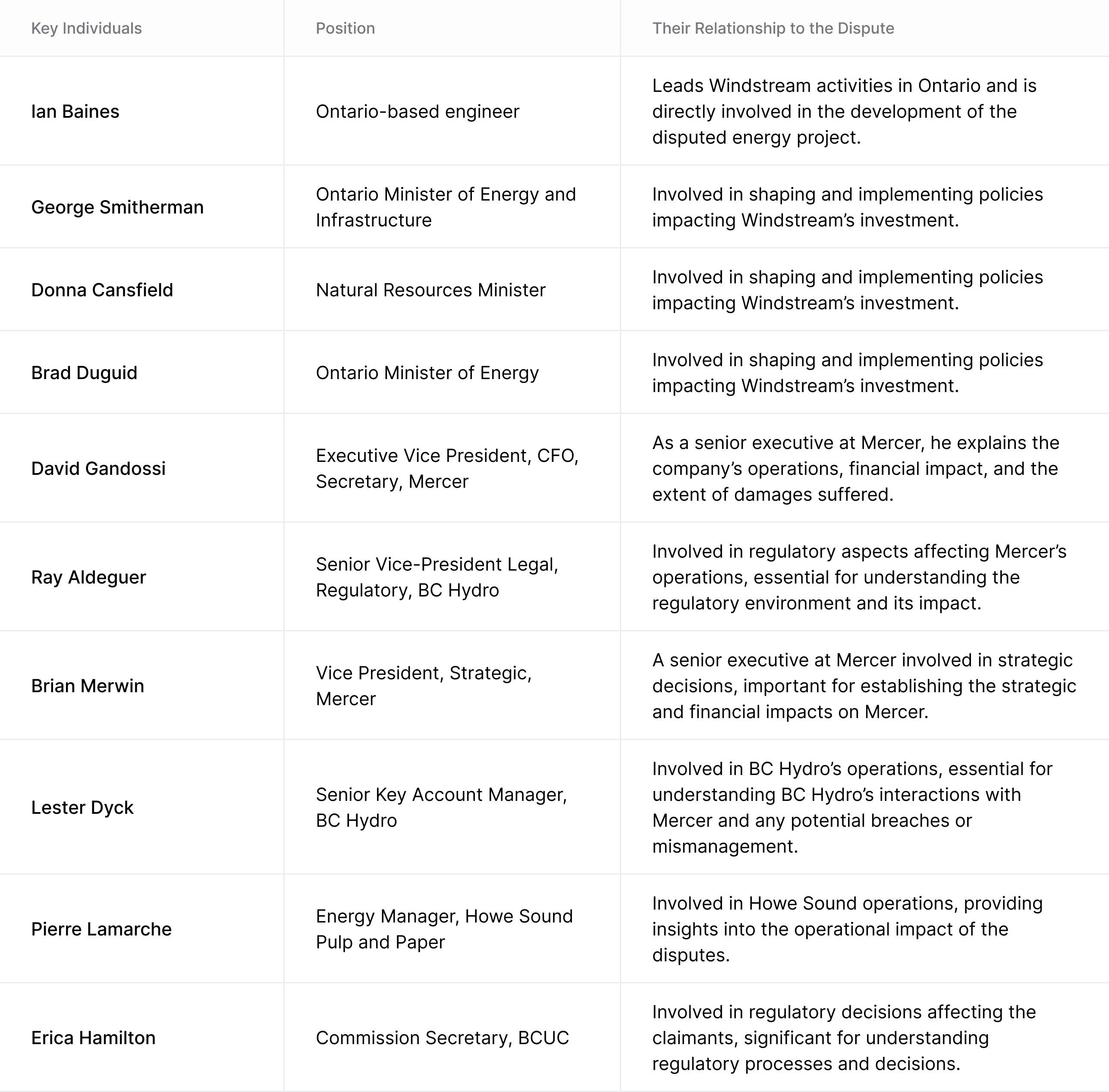

Table 1

Table 2