AI Models Can Make e-Discovery 10,000 Times More Affordable

Authors:

Artur Gasparyan

Aram Aghababyan

Introduction

The early stages of e-discovery (or disclosure in the UK) are often tedious and costly, involving the manual processing of vast amounts of electronic documents. Human reviewers filter, tag, and categorize documents, a labor-intensive process prone to error and fatigue. This drains resources and prolongs disputes, raising concerns about the accuracy and cost of traditional e-discovery. Notably, 70-80% of dispute costs are often attributed to the e-discovery process.

Because reviewing and tagging documents for confidentiality, privilege, and responsiveness is often considered straightforward, it is typically assigned to junior associates or trainees. To cut costs, some firms even outsource this work overseas. However, the variability in human review can be significant. For instance, one study found that when five groups were asked to identify relevant documents from the same set of 10,000, their results varied by 46% in determining which documents were responsive to the production request.

In this post, we assess how AI, particularly Large Language Models (LLMs), can transform this process, making it faster, more accurate, and significantly more affordable.

Can LLMs Revolutionize Document Review?

With the rise of LLMs, many of these manual processes can now be automated. LLMs can rapidly process and analyze vast amounts of data, identify key documents, and auto-tag them based on predefined categories. This automation reduces human error, accelerates the review process, and frees legal teams to focus on more strategic tasks while keeping tagging accurate and consistent.

It's important to note that this is not predictive coding or technology-assisted review (TAR), where humans must first review and tag thousands of documents. With the advent of AI models, the entire process can be completed automatically, without the need for human-tagged samples.
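As a rough illustration of tagging without human-labeled samples, the sketch below builds a zero-shot prompt that names the three tags and validates the model's reply. The prompt wording, the helper names `build_prompt` and `parse_tags`, and the JSON reply format are all assumptions made for this example; they do not describe any particular provider's API.

```python
import json

# Tags discussed in this post; the JSON reply format is an assumption.
TAGS = ("privileged", "confidential", "responsive")


def build_prompt(document_text: str, production_request: str) -> str:
    """Build a zero-shot tagging prompt (hypothetical wording)."""
    return (
        "You are assisting with e-discovery document review.\n"
        f"Production request: {production_request}\n"
        "Reply with a JSON object containing the boolean keys "
        f"{', '.join(TAGS)}.\n"
        f"Document:\n{document_text}"
    )


def parse_tags(model_reply: str) -> dict[str, bool]:
    """Check that the reply is JSON with every expected tag, then return the tags."""
    data = json.loads(model_reply)
    missing = [tag for tag in TAGS if tag not in data]
    if missing:
        raise ValueError(f"reply is missing tags: {missing}")
    return {tag: bool(data[tag]) for tag in TAGS}
```

Rejecting replies that omit a tag means a malformed answer is surfaced for follow-up rather than silently treated as "not privileged".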

The Human Cost of Traditional e-Discovery

E-discovery costs can vary widely based on several factors, including the experience level of the lawyers involved, the complexity of the case, and the geographical location. Typically, more experienced lawyers command higher hourly rates, especially when dealing with intricate cases that require specialized knowledge.

In the UK, while the process is similar, rates are adjusted according to the local currency and specific location. Larger firms situated in major cities often charge premium rates due to their reputation and the higher cost of operating in these areas.

To manage costs, many law firms choose to outsource e-discovery tasks to specialized providers. This strategy allows their lawyers to concentrate on more critical, value-added activities, ensuring that their time is used efficiently. However, when outsourcing, firms must be vigilant about maintaining data security and ensuring compliance with relevant regulations, as these factors are crucial in protecting client confidentiality and avoiding potential legal pitfalls.

The table below showcases the hourly rates for e-discovery conducted by different types of lawyers or through an outsourcing provider.

Document Review Capacity

To calculate costs, we have provided a breakdown of a typical e-Discovery set, including the number of documents a junior associate can review and the cost per document for law firms.
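The per-document cost of manual review follows from two inputs: the reviewer's hourly rate and the number of documents reviewed per hour. The function below is a minimal sketch of that arithmetic; the function name is hypothetical, and the values in the usage line are placeholders, not figures from this post.

```python
def human_cost_per_document(hourly_rate: float, documents_per_hour: float) -> float:
    """Cost of one manually reviewed document, in the currency of hourly_rate."""
    if documents_per_hour <= 0:
        raise ValueError("documents_per_hour must be positive")
    return hourly_rate / documents_per_hour


# Placeholder inputs for illustration only: 100 per hour, 50 documents per hour.
example_cost = human_cost_per_document(hourly_rate=100.0, documents_per_hour=50.0)
```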

Document Review Capacity: Comparing Human vs. LLM

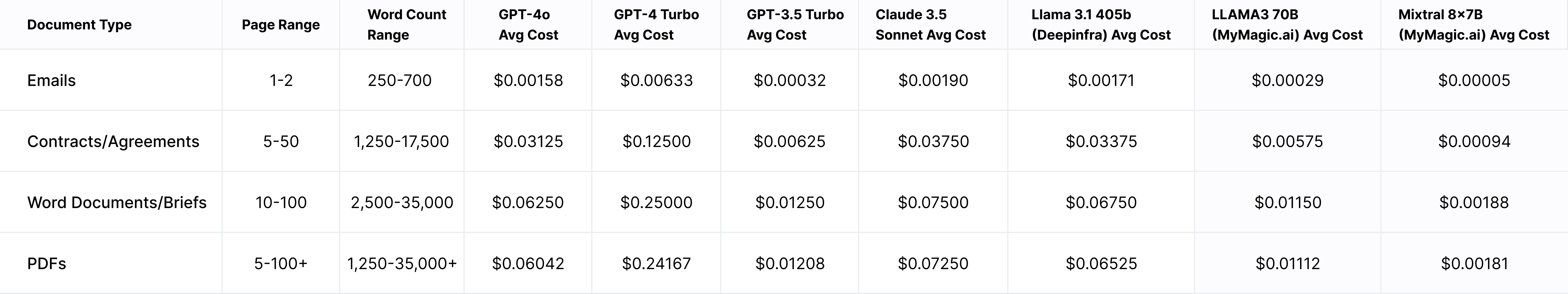

To illustrate the cost differences between manual review and LLM-powered review, we've broken down typical document types and the associated costs with various LLM providers. The table below highlights the average costs per document based on word count, allowing for a direct comparison.

Please note that these figures are generalized and consider input tokens only. LLM providers typically charge for both input and output tokens, but because the model is asked only to return tags, its output is short and adds little to the total, so we disregard output token costs for simplicity.
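The input-only cost of one document can be estimated from its word count and the provider's price per million input tokens. The sketch below assumes roughly 0.75 English words per token, a common rule of thumb that varies by tokenizer and text; the function name and the price in the usage line are placeholders rather than any provider's actual rate.

```python
def llm_cost_per_document(
    word_count: int,
    price_per_million_input_tokens: float,
    words_per_token: float = 0.75,  # assumption: rough average for English text
) -> float:
    """Estimate the input-token cost of sending one document to an LLM."""
    if words_per_token <= 0:
        raise ValueError("words_per_token must be positive")
    input_tokens = word_count / words_per_token
    return input_tokens / 1_000_000 * price_per_million_input_tokens


# Placeholder inputs for illustration only: a 750-word document at a
# price of 1.00 per million input tokens (about 1,000 tokens).
example_llm_cost = llm_cost_per_document(750, 1.00)
```

Dividing a manual per-document cost by this estimate gives the savings multiple for a given document type and provider.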

Real-Time vs Batch Inference: Cost Analysis

E-discovery is not a latency-sensitive task: it does not require the LLM to start generating a response within milliseconds (a metric known as time to first token). Instead, throughput is the more important factor. This distinction highlights a crucial decision for legal teams: choosing between traditional LLM providers and newer, more specialized options like MyMagic AI.

Traditional providers, such as OpenAI and Anthropic, excel at real-time inference, which is ideal for applications requiring immediate feedback. However, this capability often comes at a significantly higher cost, sometimes 5 to 10 times more per token, making it less practical for tasks like e-discovery, where the focus is on processing large datasets efficiently.

In contrast, MyMagic AI has pioneered the use of batch inference, processing data in bulk rather than in real-time. This approach is particularly well-suited for e-discovery, where large volumes of data need to be reviewed accurately but not instantly. By utilizing batch processing, MyMagic AI can offer costs that are substantially lower—often by 50% to 90%—compared to traditional real-time inference providers. Their infrastructure, supporting open-source LLMs like Llama and Mistral, leverages continuous batching and parallel computing to deliver high throughput at a fraction of the cost.

The diagram below illustrates how the e-discovery process would operate using batch inference. It outlines each step, from raw data cleanup to the application of AI-based tagging for privilege, confidentiality, and responsiveness, culminating in the final output format.
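The steps described above (cleanup, batched tagging, structured output) can be sketched in a few lines. Everything here is hypothetical scaffolding: `keyword_tagger` is a crude stand-in for a batch LLM call and is not a real classifier, and the class and function names are invented for this example rather than taken from MyMagic AI's API.

```python
from dataclasses import dataclass, field
from typing import Callable, Iterator


@dataclass
class TaggedDocument:
    doc_id: str
    text: str
    tags: dict[str, bool] = field(default_factory=dict)


def clean(text: str) -> str:
    """Raw data cleanup: collapse whitespace runs left over from extraction."""
    return " ".join(text.split())


def batches(items: list, size: int) -> Iterator[list]:
    """Yield consecutive slices of at most `size` items."""
    for start in range(0, len(items), size):
        yield items[start:start + size]


def keyword_tagger(texts: list[str]) -> list[dict[str, bool]]:
    """Placeholder for a batch LLM call; keyword matching is NOT a real review."""
    return [
        {
            "privileged": "attorney" in t.lower(),
            "confidential": "confidential" in t.lower(),
            "responsive": "contract" in t.lower(),
        }
        for t in texts
    ]


def run_pipeline(
    raw_docs: dict[str, str],
    tagger: Callable[[list[str]], list[dict[str, bool]]] = keyword_tagger,
    batch_size: int = 2,
) -> list[TaggedDocument]:
    """Clean every document, tag them batch by batch, and return the results."""
    docs = [TaggedDocument(doc_id, clean(text)) for doc_id, text in raw_docs.items()]
    for batch in batches(docs, batch_size):
        results = tagger([doc.text for doc in batch])
        for doc, tags in zip(batch, results):
            doc.tags = tags
    return docs
```

Passing the tagger in as a parameter keeps the pipeline independent of any one provider: swapping the stand-in for a real batch inference client would not change the cleanup or output steps.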

Incorporating a provider like MyMagic AI into the e-discovery process not only dramatically reduces costs but also allows legal teams to process large amounts of data efficiently, without compromising on accuracy or speed.

Conclusion

The evolution of e-discovery, from manual human review to the integration of Large Language Models (LLMs), represents a significant leap forward in both efficiency and cost-effectiveness. While human reviewers have traditionally been the backbone of this process, the introduction of LLMs offers a compelling alternative that not only reduces costs but also mitigates the risks associated with human error. By automating document review, LLMs allow legal teams to allocate their resources more strategically, focusing on higher-value tasks that require human judgment and expertise.

In a world where legal proceedings are increasingly complex and data-intensive, the adoption of LLMs in e-discovery is not just a cost-saving measure—it’s a strategic advantage. As the technology continues to evolve, we can expect even greater efficiencies and more accurate outcomes, making LLMs an indispensable tool for modern legal practices.

Sources

Daniel R. Rizzolo, "Legal Privilege and the High Cost of Electronic Discovery in the United States: Should We Be Thinking Like Lawyers?": https://sas-space.sas.ac.uk/5246/1/1882-2627-1-SM.pdf

LLM Provider Costs Comparison by Docsbot: https://docsbot.ai/tools/gpt-openai-api-pricing-calculator

Robert Half, 2024 Salary Guide: Legal Salaries and Hiring Trends: https://www.roberthalf.com/us/en/insights/salary-guide/legal

Legal Salaries on the Rise? That's the Half of It: eDiscovery Trends: https://cloudnine.com/ediscoverydaily/electronic-discovery/legal-salaries-on-the-rise-thats-the-half-of-it-ediscovery-trends